Real-time 3D Human Pose and Motion Reconstruction from Monocular RGB Videos

Anastasios Yiannakides, Andreas Aristidou, Yiorgos Chrysanthou

Comp. Animation & Virtual Worlds, 30(3-4), 2019.

Proceedings of Computer Animation and Social Agents - CASA'19

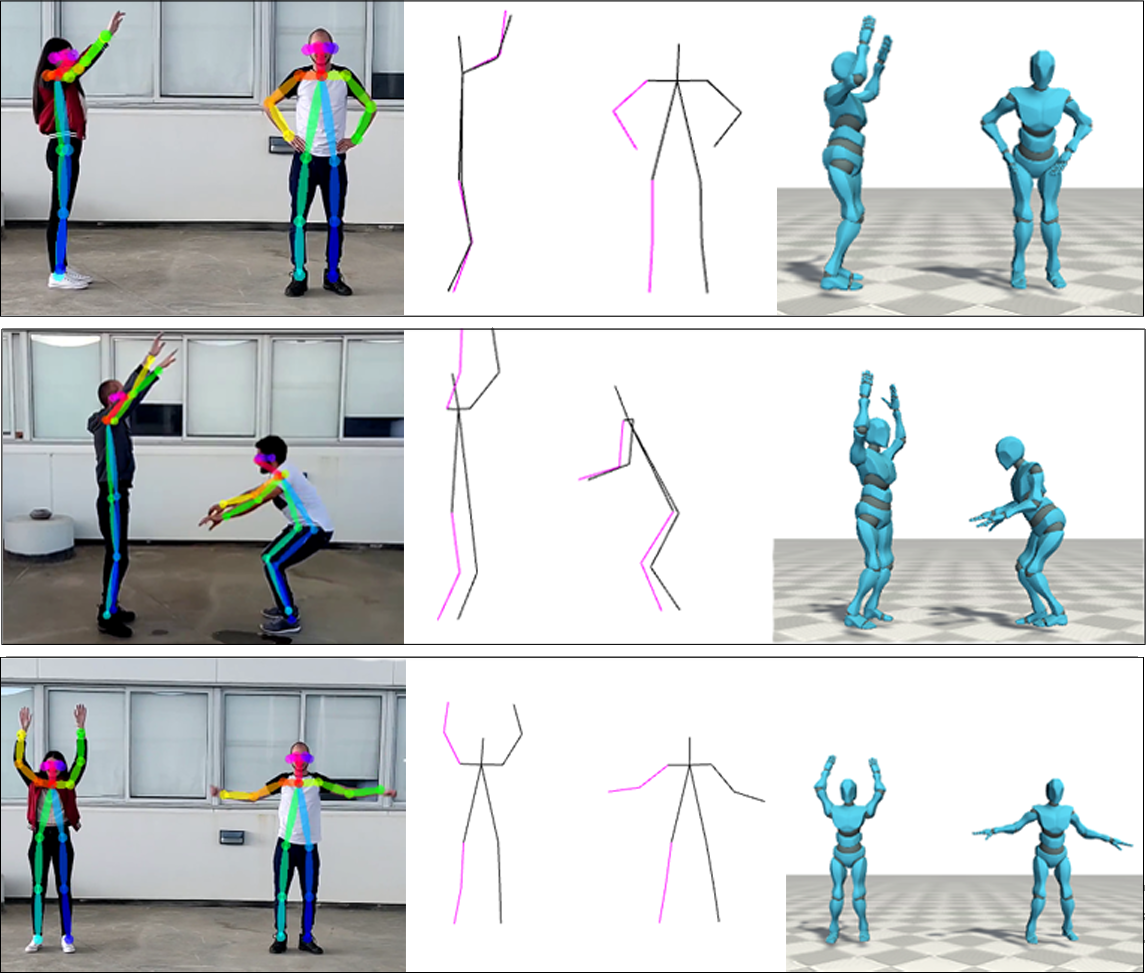

In this paper, we present a method that reconstructs articulated human motion captured with a monocular RGB camera. Our method fits 2D poses of multiple characters, estimated by a deep network, to the 2D multi-view joint projections of 3D motion data in order to retrieve the 3D body pose of the tracked character. Because it takes the temporal consistency of motion into account, it generates natural and smooth animations in real time, without bone-length violations.

Abstract

Real-time 3D pose estimation is of high interest in interactive applications, virtual reality, and activity recognition, but most importantly in the growing gaming industry. In this work, we present a method that captures and reconstructs the 3D skeletal pose and motion articulation of multiple characters using a monocular RGB camera. Our method tackles this challenging but useful task by taking advantage of recent developments in deep learning that allow 2D pose estimation of multiple characters, and of the increasing availability of motion capture data. We fit 2D poses, estimated from a single camera via OpenPose, to a database of 2D multi-view joint projections that is associated with their 3D motion representations. We then retrieve the 3D body pose of the tracked character, ensuring throughout that the reconstructed movements are natural, satisfy the model constraints, lie within a feasible set, and are temporally smooth without jitter. We demonstrate the performance of our method in several examples, including human locomotion, simultaneous capture of multiple characters, and motion reconstruction from different camera views.
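The core lookup described above (fitting an estimated 2D pose to a database of 2D projections) can be illustrated with a minimal nearest-neighbour sketch. This is not the authors' implementation: the root-centred, unit-RMS normalization, the choice of joint 0 as root, and the helper names `normalize_pose_2d` and `match_pose` are all hypothetical assumptions made so that poses detected at different image positions and scales become comparable. A practical system would also have to handle keypoints the 2D detector fails to find.

```python
import numpy as np

def normalize_pose_2d(joints: np.ndarray, root: int = 0) -> np.ndarray:
    """Translate a (J, 2) pose so its root joint is at the origin and
    rescale it to unit root-mean-square distance from the root.
    (Hypothetical normalization, assumed for illustration.)"""
    centered = joints - joints[root]
    scale = np.sqrt(np.mean(np.sum(centered ** 2, axis=1)))
    return centered / scale if scale > 0 else centered

def match_pose(query: np.ndarray, database: np.ndarray) -> int:
    """Return the index of the database projection nearest to the query.

    query:    (J, 2) raw 2D keypoints, e.g. from a 2D pose detector.
    database: (N, J, 2) projections, already passed through normalize_pose_2d.
    """
    q = normalize_pose_2d(query)
    # Sum of squared joint-position differences for each database entry.
    dists = np.sum((database - q) ** 2, axis=(1, 2))
    return int(np.argmin(dists))

# Usage: a toy three-entry database of three-joint poses.
db_raw = np.array([[[0, 0], [1, 0], [0, 1]],
                   [[0, 0], [0, 2], [2, 0]],
                   [[0, 0], [-1, -1], [1, 1]]], dtype=float)
db = np.stack([normalize_pose_2d(p) for p in db_raw])
# A scaled and shifted copy of entry 1 should still match entry 1.
query = db_raw[1] * 3.0 + np.array([5.0, -2.0])
print(match_pose(query, db))  # 1
```

Normalizing both the query and the database entries is what makes the match invariant to where the character stands in the frame and how large it appears.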

The main contributions of this work are:

- It fits 2D poses, estimated by a deep network from a single monocular camera, to the 2D multi-view joint projections of 3D motion data in order to retrieve the 3D body pose of the tracked character.

- It takes advantage of recent advances in deep convolutional networks that allow 2D pose estimation of multiple characters, and of the large (and increasing) availability of motion capture data.
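The "2D multi-view joint projections of 3D motion data" mentioned in the contributions can be produced by viewing each motion-capture pose from several virtual cameras. The sketch below assumes a y-up coordinate frame, an orthographic camera, and a hypothetical 10-degree default step between views; the function names and these parameters are illustrative assumptions, not the authors' settings.

```python
import numpy as np

def yaw_rotation(theta: float) -> np.ndarray:
    """3x3 rotation about the vertical axis (assumes a y-up coordinate frame)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])

def build_projection_database(poses_3d: np.ndarray, step_deg: float = 10.0):
    """Project every 3D pose from a ring of virtual viewpoints.

    poses_3d: (N, J, 3) joint positions, one entry per motion-capture frame.
    Returns (projections, meta): projections is (N * V, J, 2) and meta[k]
    holds (frame_index, yaw_degrees) for projections[k].
    """
    angles = np.arange(0.0, 360.0, step_deg)
    projections, meta = [], []
    for i, pose in enumerate(poses_3d):
        for a in angles:
            # Rotate all joints about the vertical axis (row vectors, hence R^T).
            rotated = pose @ yaw_rotation(np.deg2rad(a)).T
            # Orthographic projection (an assumption): keep x and y, drop depth z.
            projections.append(rotated[:, :2])
            meta.append((i, float(a)))
    return np.stack(projections), meta

# Usage: two single-joint "poses" seen from four viewpoints 90 degrees apart.
poses = np.array([[[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]]])
proj, meta = build_projection_database(poses, step_deg=90.0)
print(proj.shape)  # (8, 1, 2)
```

Keeping `meta` alongside the projections is what lets a 2D match be traced back to the 3D pose and viewing angle it came from; a smaller step gives finer viewpoint coverage at the cost of a larger database.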

More specifically, our method infers 3D human poses in real time using only data from a single video stream. To address the limitations of prior work, such as bone-length constraint violations, simultaneous capture of multiple characters, and the temporal consistency of the reconstructed skeletons, we generate a database of numerous 2D projections by rotating 3D skeletons about the yaw axis, a small angle at a time. We then match the input 2D poses (which are extracted from a single video stream using the OpenPose network) against the projections in the database, and retrieve the best 3D skeleton pose that is temporally consistent with the skeletons of the previous frames, producing natural and smooth motion.
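The text states only that the retrieved 3D pose must be temporally consistent with previous frames; it does not give the selection rule. One plausible way to express this, shown here purely as a hypothetical sketch, is to shortlist the `k` best 2D matches and pick the one minimizing the 2D fitting error plus `lam` times the squared 3D joint distance to the previous frame's pose. The weighted-sum cost, `k`, `lam`, and the function name are assumptions, not the authors' formulation.

```python
from typing import Optional

import numpy as np

def select_temporally_consistent(query_2d: np.ndarray, db_2d: np.ndarray,
                                 db_3d: np.ndarray, prev_3d: Optional[np.ndarray],
                                 k: int = 5, lam: float = 1.0) -> int:
    """Pick a database entry that balances 2D fit against 3D continuity.

    query_2d: (J, 2) normalized input pose.
    db_2d:    (M, J, 2) normalized database projections.
    db_3d:    (M, J, 3) 3D pose associated with each projection.
    prev_3d:  (J, 3) pose chosen for the previous frame, or None on the first frame.
    """
    # 2D fitting error of every database entry.
    fit = np.sum((db_2d - query_2d) ** 2, axis=(1, 2))
    # Shortlist the k entries with the smallest 2D error.
    candidates = np.argsort(fit)[:k]
    if prev_3d is None:
        return int(candidates[0])
    # Penalize candidates whose 3D pose jumps away from the previous frame.
    smooth = np.sum((db_3d[candidates] - prev_3d) ** 2, axis=(1, 2))
    cost = fit[candidates] + lam * smooth
    return int(candidates[np.argmin(cost)])

# Usage: entry 0 fits the 2D input best, but entry 1 is far closer in 3D
# to the previous frame, so the temporal term prefers entry 1.
query = np.array([[0.0, 0.0]])
db_2d = np.array([[[0.0, 0.0]], [[0.1, 0.0]], [[5.0, 5.0]]])
db_3d = np.array([[[10.0, 0.0, 0.0]], [[0.0, 0.0, 0.0]], [[0.0, 0.0, 0.0]]])
prev = np.array([[0.0, 0.0, 0.0]])
print(select_temporally_consistent(query, db_2d, db_3d, None, k=2))  # 0
print(select_temporally_consistent(query, db_2d, db_3d, prev, k=2))  # 1
```

Because every candidate is a stored motion-capture pose, the result always has valid bone lengths; the temporal term only chooses among them, which is one way to suppress frame-to-frame jitter.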


Acknowledgments

This work has been supported by the RESTART 2016-2020 Programmes for Technological Development and Innovation, through the Cyprus Research Promotion Foundation, with protocol number P2P/JPICH\_DH/0417/0052. It has also been partly supported by the project that has received funding from the European Union's Horizon 2020 Research and Innovation Programme under Grant Agreement No 739578 (RISE-Call: H2020-WIDESPREAD-01-2016-2017-TeamingPhase2) and the Government of the Republic of Cyprus through the Directorate General for European Programmes, Coordination and Development.

© 2025 Andreas Aristidou