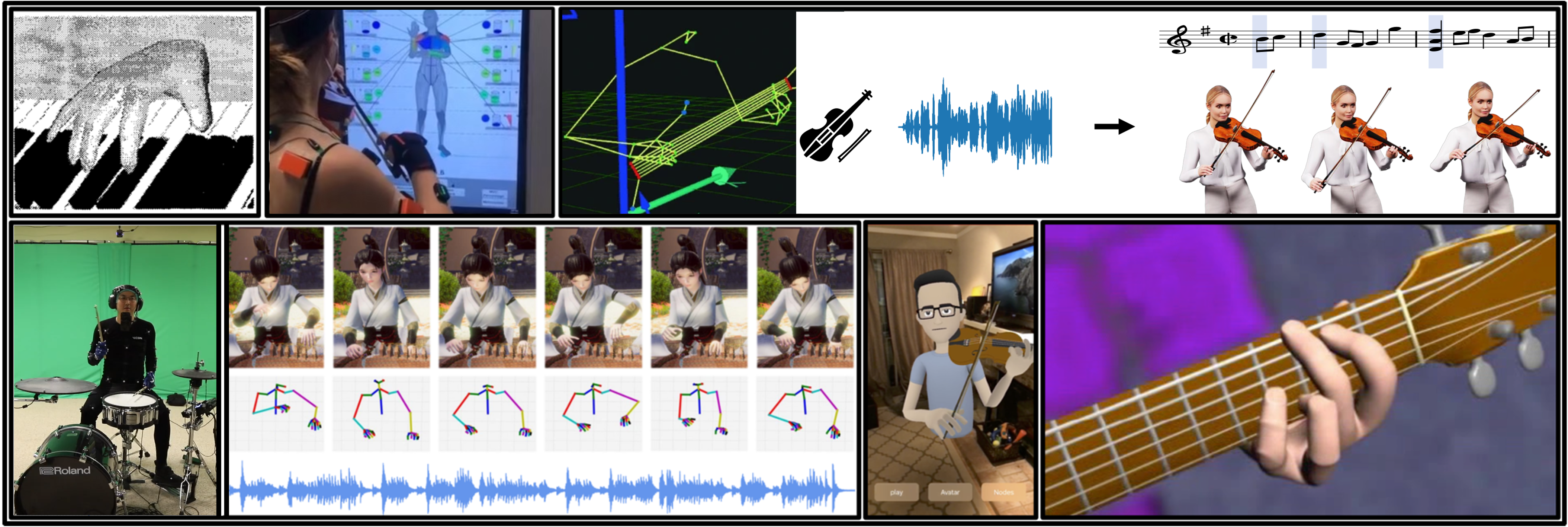

HAMLET: Human-centred generative Ai fraMework for culturaL industriEs’ digital Transition

HAMLET aims to democratize access to Generative AI for Europe's Cultural and Creative Industries (CCIs), enabling organizations of all sizes to benefit from AI in their digital transition. It combines a collaborative platform with AI tools to foster shared investment, collaboration and innovation, while promoting sustainable and inclusive frameworks for the creative industries.

![Portrait of Theodoros Kyriakou](students/Theodoros_Kyriakou.jpg)